Games played over a network inevitably experience a delay, known as network latency or simply latency. As a result, real-time games generally show every player an outdated game world, and how outdated it is depends on that player’s latency. In competitive games, players therefore have to factor in their own latency. In a shooter, for example, aiming has to account not only for distance and bullet speed but also for latency, which means aiming a little further ahead in the opponent’s assumed direction of movement, at least when there are no countermeasures such as lag compensation.

Latency is also the reason why, in the early 2000s in Germany, certain Internet providers that advertised Fastpath or deactivated Interleaving were particularly popular with gamers. In addition, ISDN was often superior to DSL in terms of latency, as ISDN does not have integrated error correction like Interleaving. I can still remember how jerky my online matches in Quake 3 Arena were, especially since I lived in a village back then where no provider offered “latency optimization”. I also lived with my parents, who would have had no sympathy for paying extra for lower latency. Last but not least, my siblings sharing the connection did not help my latency either.

The reason for this anecdote is that Quake 3 Arena, as much as I like it, did not have lag compensation and therefore low latency brought considerable benefits to the player. It is important that we distinguish between client-side prediction and lag compensation. Client-side prediction, as the name suggests, runs solely on the client and attempts to create smoother transitions between server data, which I hope to write a separate article on at some point.

Lag Compensation

Lag compensation is a technique that attempts to compensate for players’ network latencies on the server side. To do this, the server keeps a short history of the game world so that, for its calculations, it can always use the version of the game world that was current at the time of the player action. This should, who would have thought it, compensate for the latency/lag.

Basically, with every player action, each game client also tells the server which point in game world time the action refers to. When evaluating the action, the server thus takes small journeys through time and retroactively adapts its saved versions of the game world to the results of the action.
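The history-and-rewind idea can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions, not how any particular engine does it: the names `Snapshot`, `WorldHistory`, and `is_hit` are made up for this example, positions are reduced to a single axis, and the retroactive update of older snapshots is left out.

```python
from bisect import bisect_right
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict, Optional


@dataclass(frozen=True)
class Snapshot:
    time_ms: int                 # game world time of this snapshot
    positions: Dict[str, float]  # player id -> position on one axis, in metres


class WorldHistory:
    """Keeps recent snapshots so the server can rewind for hit detection."""

    def __init__(self, max_age_ms: int = 1000) -> None:
        self.max_age_ms = max_age_ms  # assumed size of the history window
        self.snapshots: Deque[Snapshot] = deque()

    def record(self, snapshot: Snapshot) -> None:
        """Store a new snapshot (in time order) and drop outdated ones."""
        self.snapshots.append(snapshot)
        oldest_allowed = snapshot.time_ms - self.max_age_ms
        while self.snapshots[0].time_ms < oldest_allowed:
            self.snapshots.popleft()

    def rewind(self, time_ms: int) -> Optional[Snapshot]:
        """Return the latest snapshot at or before time_ms, if still stored."""
        times = [s.time_ms for s in self.snapshots]
        index = bisect_right(times, time_ms) - 1
        return self.snapshots[index] if index >= 0 else None


def is_hit(history: WorldHistory, target: str, action_time_ms: int,
           aim_position_m: float, hit_radius_m: float = 0.1) -> bool:
    """Evaluate a shot against the world as it was at action_time_ms."""
    snapshot = history.rewind(action_time_ms)
    if snapshot is None or target not in snapshot.positions:
        return False  # no usable history for that point in time
    return abs(snapshot.positions[target] - aim_position_m) <= hit_radius_m


history = WorldHistory()
history.record(Snapshot(1003, {"P2": 0.0}))
history.record(Snapshot(1088, {"P2": 0.255}))
print(is_hit(history, "P2", 1003, 0.0))  # True: evaluated in the rewound world
print(is_hit(history, "P2", 1088, 0.0))  # False: P2 has moved on by then
```

The important design point is that the client supplies `action_time_ms`, so the server checks the shot against what the shooter actually saw rather than against the newest state.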

Example Scenario

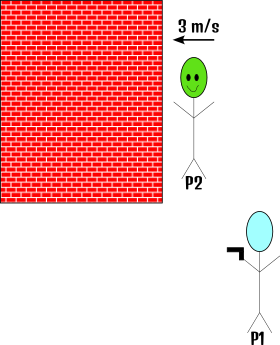

That actually sounds like a perfect solution, doesn’t it? Let’s take a closer look at a few examples, all of which take place in a first-person shooter. Player P1 has the head of the opposing character, controlled by player P2, in the crosshairs, while P2 is about to run at 3 m/s into cover 0.5 m away. To keep things as simple as possible, the examples use raycast weapons, which means that the projectile speed is treated as infinite and a shot hits immediately. The server runs at a tick rate of 60, which means that it evaluates the received network messages roughly every 17 ms (1000 ms / 60 ≈ 16.7 ms). To give you a better idea of the example scenario, it is visualized above in the best programmer art 😉.

In order not to make this topic even more complicated than it already is, all other latencies (input latencies, rendering latencies, …) are ignored.

So that you can see the latencies directly in the scenarios, three points in time are specified after all actions. “Game world time” refers to the version of the game world that the server has saved. “Registration P1” and “Registration P2” refer to the times at which P1 and P2 have registered the adjustments to the game world.

Scenario 1: Player P1 and player P2 with similar latencies

Latency P1: 40 ms; Latency P2: 50 ms

- P1 pulls the trigger when P2’s head is in the center of the crosshairs. Game world time: 1003, Registration P1: 1043, Registration P2: 1053

- Server receives the message from P1 (Game world time: 1083) and waits until the next tick.

- Server begins evaluation at the next tick. To do this, it goes back to time 1003 for hit detection and calculates that P2 has been hit. This information is then sent to P1 and P2. Game world time: 1088, Registration P1: 1128, Registration P2: 1138

So far, so good. What does it look like for P2?

- P2 receives the hit information. For him, 85 ms have passed since P1 pulled the trigger. Since he is moving towards cover at 3 m/s, he has moved locally \(3\,\frac{m}{s} * 85\,\text{ms} = 0{.}255\,\text{m}\). He was therefore not yet in cover and could be hit.

- P2 will probably be annoyed because he was hit, but recognizes the hit as valid.
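The timestamps in this scenario follow a simple pattern that can be reproduced with a small helper. The sketch below is my own reconstruction and rests on two assumptions: latencies are one-way and symmetric, and the server’s ticks lie on a 17 ms grid that happens to pass through game world time 1003 (this alignment is what yields the numbers used in the scenarios). The names `Timeline` and `shot_timeline` are hypothetical.

```python
import math
from dataclasses import dataclass

TICK_MS = 17  # rounded tick interval at a tick rate of 60


@dataclass(frozen=True)
class Timeline:
    server_receive: int     # game world time when P1's message arrives
    evaluation_tick: int    # game world time of the tick that evaluates it
    result_seen_by_p1: int  # when P1 registers the result
    result_seen_by_p2: int  # when P2 registers the result
    target_moved_m: float   # distance P2 moved between shot and evaluation


def shot_timeline(shot_world_time: int, latency_p1_ms: int, latency_p2_ms: int,
                  target_speed_m_s: float = 3.0,
                  tick_origin: int = 1003) -> Timeline:
    # P1 sees the world one latency late, and the message travels one more
    # latency back to the server, hence two latencies in total.
    server_receive = shot_world_time + 2 * latency_p1_ms
    # Assumption: ticks happen at tick_origin + k * TICK_MS.
    ticks = math.ceil((server_receive - tick_origin) / TICK_MS)
    evaluation_tick = tick_origin + ticks * TICK_MS
    elapsed_ms = evaluation_tick - shot_world_time
    return Timeline(
        server_receive=server_receive,
        evaluation_tick=evaluation_tick,
        result_seen_by_p1=evaluation_tick + latency_p1_ms,
        result_seen_by_p2=evaluation_tick + latency_p2_ms,
        target_moved_m=target_speed_m_s * elapsed_ms / 1000,
    )


scenario = shot_timeline(1003, latency_p1_ms=40, latency_p2_ms=50)
print(scenario.server_receive)     # 1083
print(scenario.evaluation_tick)    # 1088
print(scenario.result_seen_by_p1)  # 1128
print(scenario.result_seen_by_p2)  # 1138
```

Calling the helper with other latency pairs gives the receive and tick times for the remaining scenarios in the same way.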

Scenario 2: Player P1 with significantly lower latency than player P2

Latency P1: 10 ms; Latency P2: 250 ms

- P1 pulls the trigger when P2’s head is in the center of the crosshairs. Game world time: 1003, Registration P1: 1013, Registration P2: 1253

- Server receives the message from P1 (Game world time: 1023) and waits until the next tick.

- Server begins evaluation at the next tick. To do this, it goes back to time 1003 for hit detection and notices that no information is yet available from P2 for this time, as it is lagging behind due to its high latency. Game world time: 1037, Registration P1: 1047, Registration P2: 1287

Now there are two possibilities:

1. The server waits until P2 has arrived at time 1003 and only then starts the calculations. This means that the server has to wait another 250 ms, which P2 can of course use for further inputs.

   - The server then determines during its calculations that P2 has not been hit.

   - As P2 is on his way to cover, he runs in this direction the whole time. When the server sent game world time 1003 to all players, player P2 had only just seen game world time 753, and therefore the server could only partially determine the correct position of P2 in the game world at time 1003. As a result, he can still travel \(3\, \frac{m}{s} * 250\, \text{ms} = 0{.}75\, \text{m}\) up to time 1003 and is therefore in cover.

   - P1 does not receive any hit feedback, as P2 has long since moved away from his position and is in cover. This circumstance is often referred to as “ping armor” and should be taken into account by P1 for future shots.

2. The server interpolates the previous information.

   - The server arrives at an interpolation result similar to what P1 saw and therefore registers a hit.

Scenario 3: Player P1 with significantly higher latency than player P2

Latency P1: 250 ms; Latency P2: 10 ms

- P1 pulls the trigger when P2’s head is in the center of the crosshairs. Game world time: 1003, Registration P1: 1253, Registration P2: 1013

- Server receives the message from P1 (Game world time: 1503) and waits until the next tick.

- Server begins evaluation at the next tick. To do this, it goes back to time 1003 for hit detection and calculates that P2 has been hit. This information is then sent to P1 and P2. Game world time: 1513, Registration P1: 1763, Registration P2: 1523

So far, so good. What does it look like for P2?

- P2 receives the hit information. For him, 510 ms have passed since P1 pulled the trigger. Since he is moving towards cover at 3 m/s, he was able to move locally \(3\, \frac{m}{s} * 510\, \text{ms} = 1{.}53\, \text{m}\). He was therefore completely in cover locally and could not actually have been hit from his point of view.

- P2 will probably be very annoyed because he was hit even though he was already in cover. This phenomenon is also known as “bullets bending around corners”. Does this remind anyone else of the movie Wanted (2008)?
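Whether a hit feels fair to P2 comes down to comparing the elapsed time with the time P2 needs to reach cover. As a short worked calculation using only the numbers from the example scenario (the symbol \(t_{\text{cover}}\) is introduced here purely for illustration):

\[
t_{\text{cover}} = \frac{0{.}5\,\text{m}}{3\,\frac{\text{m}}{\text{s}}} \approx 167\,\text{ms}
\]

In scenario 1, only 85 ms pass, which is less than 167 ms, so P2 is still out in the open from his own point of view. In this scenario, 510 ms pass, so locally P2 has already been behind cover for roughly \(510\,\text{ms} - 167\,\text{ms} = 343\,\text{ms}\) when the hit arrives.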

Bullets bending around corners in the movie Wanted, Universal Pictures 2008

Conclusion

Lag compensation per se is an interesting technique that, in my opinion, can improve the gaming experience for all players in fast-paced competitive PvP games, such as shooters, if used under the right conditions. It allows players to concentrate on the actual game mechanics without having to worry about network latency. However, it is essential that the players’ latencies are not too far apart, because otherwise players with low latencies are put at a disadvantage. After all, lag compensation was developed to compensate for latency differences.

Ultimately, it remains a game design decision. As is so often the case, you have to weigh up the pros and cons and consider whether the increased complexity really adds value for the players.

Further reading

There are of course many other articles on the subject. The ones I have used as sources for this article are:

- Fast-Paced Multiplayer (Part IV): Lag Compensation

- Latency Compensating Methods in Client/Server In-game Protocol Design and Optimization

- What Every Programmer Needs To Know About Game Networking

- The State of Hit Registration in Hunt

- Source Multiplayer Networking - Valve Developer Community
